1. Introduction

This blog post is about Support Vector Machines (SVMs), but not only about SVMs. SVMs belong to the class of classification algorithms and are used to separate two or more groups. In its pure form an SVM is a linear separator, meaning that it can only separate groups using a straight line. However, any linear classifier can be transformed into a nonlinear classifier, and SVMs are an excellent way to explain how this can be done. For a deeper introduction to the topic I recommend Tibshirani (2009), which gives a more detailed description, including a derivation of the complete Lagrangian.

The general idea of the SVM is to separate two (or more) groups using a straight line (see Figure 1). In general, however, there exist infinitely many lines that fulfill the task. So which one is the “correct” one? The SVM answers this question by choosing the line (or hyperplane, if there are more than two features) that is farthest away from the nearest points within each group (this distance is denoted by $M$).

2. Mathematical Formulation

2.1 Separating Hyperplanes

Suppose multivariate data given by a pair $(x, y)$, where the explanatory variable $x \in \mathbb{R}^p$ and the group coding $y \in \{-1, 1\}$. In the following we assume an i.i.d. sample of size $N$ given by $(x_1, y_1), \dots, (x_N, y_N)$.

Any separating hyperplane (which is a line if there are only two features) can therefore be described such that there exist some $\beta$ and $\beta_0$ satisfying

(1) ![]()

(2) ![]()
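For reference, the separable case is usually written as follows in the textbook literature (for instance in the book recommended in the introduction). This is only a sketch under that assumption: the symbols $\beta$, $\beta_0$ and $M$ are assumed here, and the exact form of (1) and (2) may differ.

```latex
\begin{align*}
  & \{x : x^T\beta + \beta_0 = 0\}
    && \text{(the hyperplane)} \\
  & y_i\,(x_i^T\beta + \beta_0) > 0, \quad i = 1,\dots,N
    && \text{(every point lies on its own side)} \\
  & \max_{\beta,\,\beta_0,\,\|\beta\| = 1} M
    \quad \text{subject to} \quad
    y_i\,(x_i^T\beta + \beta_0) \ge M, \quad i = 1,\dots,N
    && \text{(maximal margin)}
\end{align*}
```

The last line makes the choice of the “correct” line precise: among all separating hyperplanes, pick the one with the largest distance $M$ to the nearest points.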

2.2 Support Vector Machines

In most cases the assumption that there exists a hyperplane that perfectly separates the data points is unrealistic. Usually some points will lie on the wrong side of the hyperplane. In that case (2) will not have a solution. The idea of the SVM is now to introduce a slack parameter $\xi$ to fix this issue. In particular, we modify (1) such that we require

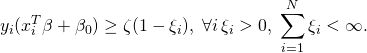

(3)

(4) ![]()

(5)
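As a hedged reference point, the usual textbook form of the soft-margin problem and of its solution is sketched below. The slack variables $\xi_i$, the multipliers $\hat\alpha_i$ and the unnamed bound on the total slack are assumptions taken from the standard treatment, not from the equations of this post.

```latex
\begin{align*}
  & y_i\,(x_i^T\beta + \beta_0) \ge 1 - \xi_i, \qquad \xi_i \ge 0,
    \quad i = 1,\dots,N \\
  & \min_{\beta,\,\beta_0} \|\beta\|
    \quad \text{subject to the constraints above and} \quad
    \sum_{i=1}^N \xi_i \le \text{constant} \\
  & \hat\beta = \sum_{i=1}^N \hat\alpha_i\, y_i\, x_i
\end{align*}
```

A point with $\xi_i > 1$ lies on the wrong side of the hyperplane, so bounding the sum of the $\xi_i$ bounds the number of misclassified training points. The last line shows that the solution is a linear combination of the data points; the points with $\hat\alpha_i > 0$ are the support vectors.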

2.2 Nonlinear SVMs

The method described so far can only handle data where the groups can be separated by some hyperplane (line). In many cases, however, the data are not suited to a linear method. See Figure 2 for an example: the groups are arranged in two circles with different radii, so any attempt to separate them using a line will fail. However, any linear method can be transformed into a nonlinear method by projecting the data $x$ into a higher-dimensional space. This new data $h(x)$ may then be separable by some linear method. In the case of Figure 2 a suitable projection is, for example, given by $h(x) = (x_1, x_2, \sqrt{x_1^2 + x_2^2})^T$, which maps the data onto a cone where the data can be separated using a hyperplane, as can be seen in Figure 3.

This method has some drawbacks, however. First of all, $h$ will in general be unknown. In our simple example we were lucky to find a suitable $h$; for a more complicated data structure, finding a suitable $h$ turns out to be very hard. Secondly, depending on the dataset, the dimension of $h(x)$ needed to guarantee the existence of a separating hyperplane can become quite large and even infinite. Before we deal with these issues using kernels, we will first have a look at the modified minimization problem (4) using $h(x)$ instead of $x$.
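As a quick numerical check of this idea, the sketch below lifts two concentric circles onto a cone. The map `cone_map` (third coordinate equal to the distance from the origin), the radii 1 and 3, and the separating plane at height 2 are assumptions chosen for illustration, not values taken from the figures.

```python
import numpy as np


def cone_map(X):
    """Map 2-D points (x1, x2) to (x1, x2, sqrt(x1^2 + x2^2))."""
    r = np.sqrt((X ** 2).sum(axis=1))
    return np.column_stack([X, r])


# Two groups arranged on circles with radius 1 (group -1) and 3 (group +1).
rng = np.random.default_rng(0)
angles = rng.uniform(0.0, 2.0 * np.pi, size=200)
radii = np.where(np.arange(200) < 100, 1.0, 3.0)
y = np.where(radii < 2.0, -1, 1)
X = np.column_stack([radii * np.cos(angles), radii * np.sin(angles)])

# Lift the data; the plane "third coordinate = 2" now separates the groups.
H = cone_map(X)
beta = np.array([0.0, 0.0, 1.0])
beta0 = -2.0
separated = bool(np.all(y * (H @ beta + beta0) > 0))
print(separated)
```

In the original two-dimensional space no such `beta` and `beta0` exist, because the inner circle lies completely inside the outer one.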

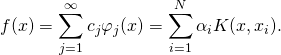

Let the nonlinear solution function be given by $f(x) = h(x)^T \beta + \beta_0$, where $h(x)$ is the projected data. To overcome the need for the constant, we introduce a penalty $\lambda$ and rewrite (4) as

(6) $$\min_{\beta_0,\beta} \sum_{i=1}^N [1 - y_i f(x_i)]_+ + \frac{\lambda}{2} \|\beta\|^2.$$

The notation $[z]_+ = \max(0, z)$ denotes the positive part of $z$.
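Objective (6) is straightforward to evaluate numerically. The following minimal sketch assumes that the rows of `H` already hold the (possibly transformed) feature vectors; the function name `hinge_objective` and the toy data are hypothetical.

```python
import numpy as np


def hinge_objective(beta0, beta, H, y, lam):
    """Value of (6): sum of hinge losses plus (lam / 2) * ||beta||^2."""
    f = H @ beta + beta0                   # f(x_i) for every sample
    hinge = np.maximum(0.0, 1.0 - y * f)   # positive part of 1 - y_i f(x_i)
    return hinge.sum() + 0.5 * lam * (beta @ beta)


# Toy data: the second point lies inside the margin and is penalized.
H = np.array([[2.0, 0.0], [0.5, 0.0], [-2.0, 0.0]])
y = np.array([1, 1, -1])
value = hinge_objective(0.0, np.array([1.0, 0.0]), H, y, lam=1.0)
print(value)
```

Only points with $y_i f(x_i) < 1$ contribute to the sum, so points far on the correct side of the hyperplane do not influence the objective.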

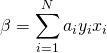

From (5) we already know that a nonlinear solution function $f(x)$ will have the form

(7)

We can verify, that knowledge about

2.2.1 Kernels

We have already encountered kernels when estimating densities or regressions. In this application kernels are a way to reduce the infinite-dimensional problem to a finite-dimensional optimization problem, because the complexity of the optimization problem depends only on the dimensionality of the input space and not on that of the feature space. To see this, let $K(x, x') = \langle h(x), h(x') \rangle$. Instead of looking at some particular function $f$, we consider the whole space of functions generated by the linear span of $\{K(\cdot, x')\}$, where $f$ is just one element in this space. To model this space in practice, popular kernels are

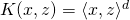

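- linear kernel: $K(x, x') = \langle x, x' \rangle$

- polynomial kernel: $K(x, x') = (1 + \langle x, x' \rangle)^d$

- radial kernel: $K(x, x') = \exp(-\gamma \|x - x'\|^2)$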
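The three kernels can be sketched in a few lines. The parameterization used here (offset 1 and degree `degree` for the polynomial kernel, scale `gamma` for the radial kernel) is the common textbook one and is an assumption, as are all function names. The helper `poly2_features` is a hypothetical explicit feature map, included only to show that the degree-2 polynomial kernel equals an inner product in a six-dimensional feature space without ever constructing that space.

```python
import numpy as np


def linear_kernel(x, z):
    return x @ z


def polynomial_kernel(x, z, degree=2):
    return (1.0 + x @ z) ** degree


def radial_kernel(x, z, gamma=1.0):
    diff = x - z
    return np.exp(-gamma * (diff @ diff))


def gram_matrix(kernel, X):
    """N x N matrix with entries kernel(x_i, x_j)."""
    n = X.shape[0]
    return np.array([[kernel(X[i], X[j]) for j in range(n)] for i in range(n)])


def poly2_features(x):
    """Explicit feature map whose inner product is (1 + <x, z>)^2 in 2-D."""
    s = np.sqrt(2.0)
    return np.array([1.0, s * x[0], s * x[1],
                     x[0] ** 2, x[1] ** 2, s * x[0] * x[1]])


X = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
K_poly = gram_matrix(polynomial_kernel, X)
explicit = poly2_features(X[0]) @ poly2_features(X[2])
print(K_poly[0, 2], explicit)
```

Evaluating the kernel costs one inner product in the input space, whereas the explicit map grows quickly with the degree and, for the radial kernel, would be infinite-dimensional.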

(8)

(9)

Thus we can write (6) in its general form as

(10)

According to (9) we can write down (10) using matrix notation

(11) ![]()
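To make the matrix notation concrete, the sketch below evaluates a kernelized objective. It assumes the usual finite-dimensional representation $f(x) = \beta_0 + \sum_{i=1}^N \alpha_i K(x, x_i)$ with penalty $\frac{\lambda}{2} \alpha^T K \alpha$, where $K$ is the $N \times N$ matrix of kernel evaluations. This is the standard textbook form and may differ in detail from (11); the names `alpha`, `radial_gram` and `kernel_objective` are hypothetical.

```python
import numpy as np


def radial_gram(X, gamma=1.0):
    """Gram matrix of the radial kernel, K[i, j] = exp(-gamma * ||x_i - x_j||^2)."""
    sq_dist = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-gamma * sq_dist)


def kernel_objective(alpha, beta0, K, y, lam):
    """Hinge loss of f = K alpha + beta0 plus (lam / 2) * alpha^T K alpha."""
    f = K @ alpha + beta0
    hinge = np.maximum(0.0, 1.0 - y * f)
    return hinge.sum() + 0.5 * lam * (alpha @ K @ alpha)


# Two one-dimensional points, one from each group.
X = np.array([[0.0], [2.0]])
y = np.array([1, -1])
K = radial_gram(X)
value = kernel_objective(np.array([1.0, -1.0]), 0.0, K, y, lam=1.0)
print(value)
```

The optimization now runs over the $N$ coefficients in `alpha` (plus `beta0`), no matter how large the feature space behind the kernel is.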
